Spark provides APIs in Scala, Java, Python (PySpark) and R. We use PySpark and Jupyter, previously known as IPython Notebook, as the development environment. There are many articles online that talk about Jupyter and what a great tool it is, so we won’t introduce it in details here.

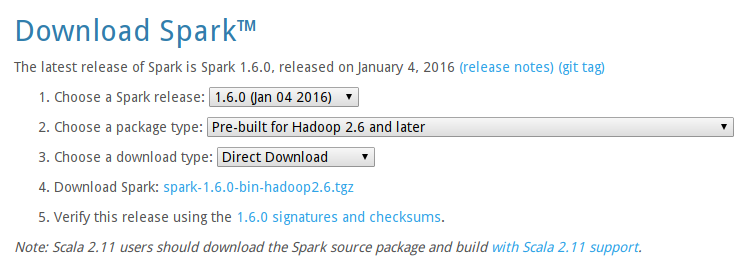

Download Spark

Get Spark pre-built package from the downloads page of the Spark project website.

Make sure you have java installed on your machine. Now unzip the downloaded package and put it in whatever directory you like. The last step is to define the path variable SPARK_HOME to the absolute path of this directory. We recommend adding this variable to your bash file (.bashrc on linux, .bash_profile on Mac) so that you that you only need to create it once.

tar -zxvf spark-2.1.0-bin-hadoop2.6.tgz

cd spark-2.1.0-bin-hadoop2.6

(on Ubuntu, Linux)

echo "export SPARK_HOME=`pwd`" >> ~/.bashrc

(on Mac)

echo "export SPARK_HOME=`pwd`" >> ~/.bash_profile

Close your terminal window. When you open your terminal again, everythign should now be setup.

First Spark application

Let’s now launch the PySpark shell in Jupyter Notebook. Assuming you have already installed jupyter and findspark the only thing left to do is to run jupyter with the command: jupyter notebook. Inside a jupyter python notebook, the following must always be included to run pyspark:

import findspark

findspark.init()

from pyspark import SparkContext

sc = SparkContext(master="local[4]")

In our first Spark application, we will run a Monte Carlo experiment to find an estimate for $\pi$.

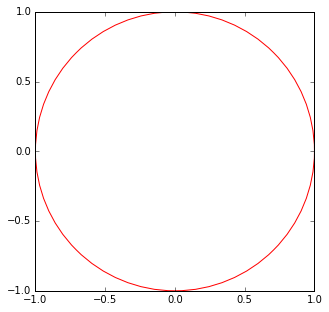

Here is how we are going to do it. The figure bellow shows a circle with radius $r = 1$ inscribed within a 2×2 square. The ratio between the area of the circle and the area of the square is $\frac{\pi}{4}$. If we sample enough points in the square, we will have approximately $\rho = \frac{\pi}{4}$ of these points that lie inside the circle. So we can estimate $\pi$ as $4 \rho$.

Run the PySpark shell, then open http://localhost:8888 with your favorite browser, then create a Python notebook.

First, we draw a sufficient amount of points inside the square.

import numpy as np

TOTAL = 1000000

dots = sc.parallelize([2.0 * np.random.random(2) - 1.0

for i in range(TOTAL)]) \

.cache()

print("Number of random points:", dots.count())

stats = dots.stats()

print('Mean:', stats.mean())

print('stdev:', stats.stdev())

Output:

Number of random points: 1000000

Mean: [ -4.66502953e-04 2.90346990e-05]

stdev: [ 0.57730389 0.57742223]

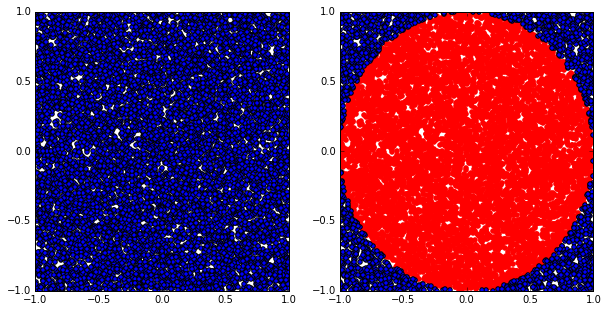

We can sample a small fraction of these points and visualize them.

%matplotlib inline

from operator import itemgetter

from matplotlib import pyplot as plt

plt.figure(figsize = (10, 5))

# Plot 1

plt.subplot(1, 2, 1)

plt.xlim((-1.0, 1.0))

plt.ylim((-1.0, 1.0))

sample = dots.sample(False, 0.01)

X = sample.map(itemgetter(0)) \

.collect()

Y = sample.map(itemgetter(1)) \

.collect()

plt.scatter(X, Y)

# Plot 2

plt.subplot(1, 2, 2)

plt.xlim((-1.0, 1.0))

plt.ylim((-1.0, 1.0))

inCircle = lambda v: np.linalg.norm(v) <= 1.0

dotsIn = sample.filter(inCircle) \

.cache()

dotsOut = sample.filter(lambda v: not inCircle(v)) \

.cache()

# inside circle

Xin = dotsIn.map(itemgetter(0)) \

.collect()

Yin = dotsIn.map(itemgetter(1)) \

.collect()

plt.scatter(Xin, Yin, color = 'r')

# outside circle

Xout = dotsOut.map(itemgetter(0)) \

.collect()

Yout = dotsOut.map(itemgetter(1)) \

.collect()

plt.scatter(Xout, Yout)

Finally, let’s compute the estimated value of $\pi$.

pi = 4.0 * (dots.filter(inCircle).count()*1.0 / TOTAL)

print("The estimation of \pi is:", pi)

Output:

The estimation of \pi is: 3.142604

Next step

I recommend reading Spark Programming Guide for next step. Besides, you can learn more example Spark programs on the Spark website.

References

- Spark official documents

- Example Python Spark programs on the Spark Github repository